Lupine Publishers| Advances in Robotics & Mechanical Engineering (ARME)

Abstract

Through fifteen major projects of the International Seismological Bureau, a worldwide seismic monitoring system has been built, which publishes all observable local and global seismic data weekly for users to download. Given the sheer volume of this data, the Hadoop platform can manage and store it efficiently and analyze it to extract more valuable information. Hadoop uses distributed storage to improve the read/write rate and expand storage capacity, and it uses MapReduce to process the data held in HDFS (Hadoop Distributed File System) so that it is analyzed and processed quickly. It also stores redundant copies of the data to ensure data safety, which makes it a suitable tool for handling large data sets.

Introduction

Seismic data are the data extracted from the digital readings of seismic waves. Seismic waves are similar to the echoes we hear when we shout from the top of a rugged cliff; the only difference is that seismic waves propagate downwards. In modern society, information grows at high speed and a large amount of data resides on cloud platforms. Over one third of all digital data is produced yearly and needs to be processed and analyzed. Huge volumes of live digital data such as seismic data, where even a small amount of information has a great impact on human life, have to be analyzed and processed to obtain more valuable information [1]. This is where the Hadoop ecosystem comes in: it makes it easy to develop applications that process massive data, it is highly fault tolerant, and it is open source and built on the Java platform, which eases deployment of the system [2].

Hadoop Architecture

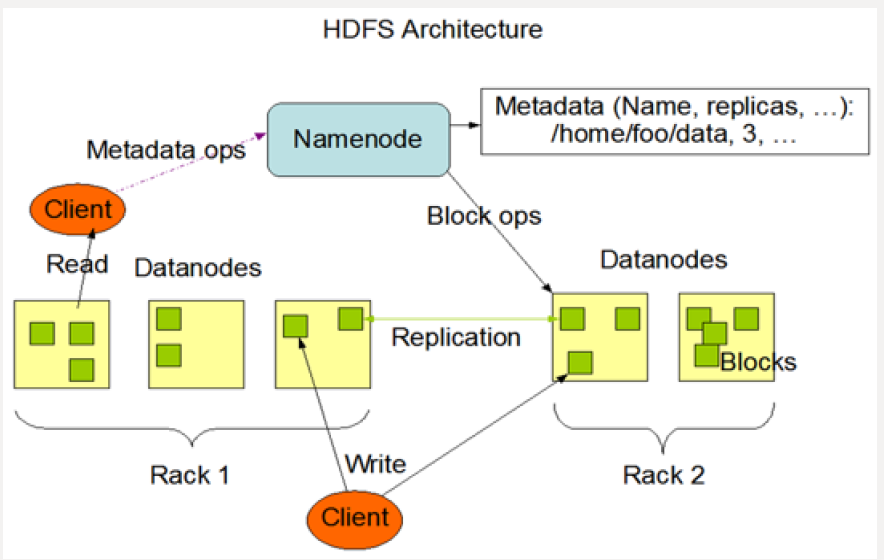

Hadoop supports a traditional hierarchical file organization. HDFS and MapReduce are the two cores of Hadoop: HDFS provides the underlying distributed storage, and MapReduce provides distributed parallel processing for programs. The HDFS architecture is designed for high fault tolerance, scalability, accessibility and high throughput, to meet the demands of streaming access and of processing very large files, and it can run on inexpensive commodity servers. It uses a master/slave architecture [3].

Master:

a) It has one Name Node (NN).

b) It manages the namespace of the file system and client access to files.

c) It is responsible for processing namespace operations of the file system (open, close, rename, etc.) and for mapping blocks to DataNodes (DN).

Slave:

a) It has several DataNodes, usually one per node in the cluster.

b) It manages the stored data.

c) It is responsible for serving file read and write requests, and for creating, deleting and replicating data blocks under the unified control of the NN.

d) The presence of a single NN in a cluster greatly simplifies the architecture of the system.

e) The NN acts as the repository for all HDFS metadata.

f) The system is designed so that user data never flows through the NN.
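The division of labour described above can be illustrated with a toy model. This is a minimal sketch in plain Python, not the HDFS API: the class names, the 4-character block size, the round-robin placement and the replication factor of 2 are all assumptions made for illustration. It shows the NN holding only metadata (which blocks make up a file and where they live) while the block contents are written to and read from the DataNodes.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Slave: stores block contents, keyed by block id."""
    name: str
    blocks: dict = field(default_factory=dict)


@dataclass
class NameNode:
    """Master: holds metadata only, never the file contents."""
    block_map: dict = field(default_factory=dict)   # path -> list of block ids
    locations: dict = field(default_factory=dict)   # block id -> DataNode names


def write_file(nn, datanodes, path, data, block_size=4, replication=2):
    """Split data into blocks and store each block on `replication` DataNodes."""
    nn.block_map[path] = []
    for i in range(0, len(data), block_size):
        index = i // block_size
        block_id = f"{path}#{index}"
        # Round-robin placement (an assumption; real HDFS is rack-aware).
        targets = [datanodes[(index + r) % len(datanodes)]
                   for r in range(replication)]
        for dn in targets:
            dn.blocks[block_id] = data[i:i + block_size]  # contents go to DataNodes
        nn.block_map[path].append(block_id)               # NN records metadata only
        nn.locations[block_id] = [dn.name for dn in targets]


def read_file(nn, datanodes, path):
    """Ask the NN where the blocks live, then fetch them from the DataNodes."""
    by_name = {dn.name: dn for dn in datanodes}
    parts = []
    for block_id in nn.block_map[path]:
        first_replica = nn.locations[block_id][0]
        parts.append(by_name[first_replica].blocks[block_id])
    return "".join(parts)
```

Because every block is stored on two DataNodes in this sketch, losing one node would still leave a copy of each block, which is the idea behind the redundant storage mentioned in the abstract.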

MapReduce Architecture:

a) It is a software framework for easily writing applications that process vast amounts of data (multi-terabyte data sets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner [4].

b) A MapReduce job splits the input data into independent chunks, which are processed by the map tasks in a completely parallel manner.

c) The input to a map task is always a set of key-value pairs; the outputs of the mappers are sorted, and the resulting key-value pairs are fed to the reducers.

d) Both the input and the output of a job are stored in a file system, and the framework takes care of scheduling tasks, monitoring them and re-executing failed tasks [5].

e) MapReduce is a distributed computing framework with a single master node, called the JobTracker, and one slave TaskTracker per cluster node.
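Two of the points above, splitting the input into independent chunks and re-executing failed tasks, can be sketched in a few lines. This is a simplified, sequential illustration in plain Python rather than Hadoop code; the function names, the retry limit of 3 and the deliberately flaky map task are hypothetical.

```python
def split_into_chunks(records, chunk_size):
    """Split the input into independent chunks, one per map task."""
    return [records[i:i + chunk_size]
            for i in range(0, len(records), chunk_size)]


def run_with_retry(task, chunk, max_attempts=3):
    """Run one task and re-execute it if it fails, up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task(chunk)
        except RuntimeError:
            if attempt == max_attempts:
                raise


def make_flaky_map_task():
    """Hypothetical map task that fails on its first call, then succeeds."""
    state = {"calls": 0}

    def map_task(chunk):
        state["calls"] += 1
        if state["calls"] == 1:
            raise RuntimeError("simulated node failure")
        return [(word, 1) for word in chunk]

    return map_task, state


records = ["a", "b", "a", "c", "b", "a"]
chunks = split_into_chunks(records, chunk_size=2)
map_task, state = make_flaky_map_task()
# Each chunk is handled independently; the first attempt fails and is retried.
map_outputs = [run_with_retry(map_task, chunk) for chunk in chunks]
```

Since the chunks do not depend on each other, a failed task can be re-run on its own chunk without touching the results of the others. In a real cluster the map tasks would run in parallel on different nodes instead of in a loop.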

Data Preparation and Processing

MapReduce Architecture:

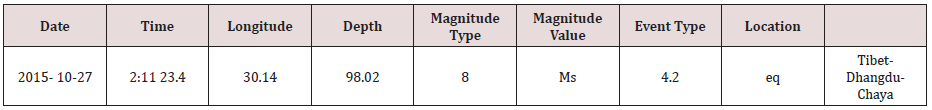

The data is downloaded from the China Earthquake Scientific Share Data Centre. It is provided as Excel spreadsheets, which must be converted to CSV format before the data is stored in HDFS. Over 300,000 records have been collected from observations of earthquake regions all over China since January 1st, 2015, most of them recording the many small earthquakes that occur every day. This paper counts and analyzes the earthquake statistics by occurrence time and location, using the MapReduce framework on a pseudo-distributed Hadoop platform.

Data Processing

The data processing environment is based on a pseudo-distributed Hadoop platform and its master/slave architecture. There are 4 major steps [6]:

a) Data preprocessing: download the required data and convert it to .csv format.

b) Store data: store the data set in the default input path of Hadoop, i.e. bin/hadoop fs -put earthquake_data.csv /usr/input

c) Run the program: run the MapReduce program locally to obtain the analysis results.

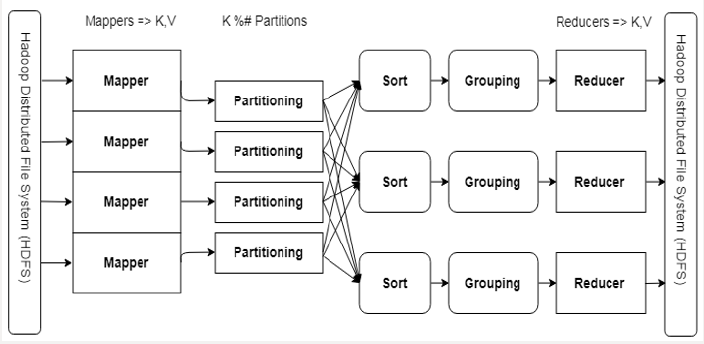

Figure 2: The MapReduce Pipeline.

A mapper receives (Key, Value) and outputs (Key, Value).

A reducer receives (Key, Iterable [Value]) and outputs (Key, Value).

Partitioning, sorting and grouping provide the Iterable [Value] and allow the job to scale.

d) Check the results: check the operation results in the output directory of HDFS (Figures 1-3).
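The partitioning, sorting and grouping stage of the pipeline in Figure 2 can be sketched as follows. This is plain Python, not the Hadoop implementation; the crc32-based partitioner, the choice of two reducers and the sample region names are assumptions made for illustration.

```python
import zlib
from itertools import groupby
from operator import itemgetter


def partition(key, num_reducers):
    """Choose a reducer for a key; crc32 keeps the choice stable across runs."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(mapped_pairs, num_reducers):
    """Partition, sort and group mapper output into (key, [values]) lists."""
    buckets = [[] for _ in range(num_reducers)]
    for key, value in mapped_pairs:
        buckets[partition(key, num_reducers)].append((key, value))
    grouped = []
    for bucket in buckets:
        bucket.sort(key=itemgetter(0))   # sorting makes equal keys adjacent
        grouped.append([(key, [v for _, v in pairs])
                        for key, pairs in groupby(bucket, key=itemgetter(0))])
    return grouped


def reducer(key, values):
    """Receives (Key, Iterable[Value]) and outputs (Key, Value)."""
    return key, sum(values)


# Hypothetical mapper output: one (region, 1) pair per earthquake record.
mapped = [("Sichuan", 1), ("Yunnan", 1), ("Sichuan", 1), ("Xinjiang", 1)]
per_reducer = shuffle(mapped, num_reducers=2)
results = dict(reducer(k, vs) for part in per_reducer for k, vs in part)
```

All pairs with the same key land in the same partition, so each reducer can work on its own share of the keys without coordinating with the others. That property is what lets the reduce side scale out.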

Proposed System

After the required data has been collected, there are two major steps for implementation:

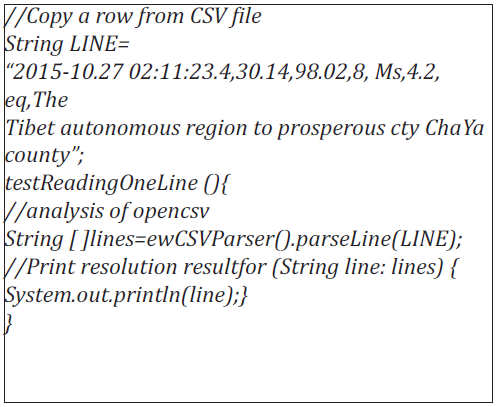

Analysis of CSV file:

a) The Excel file looks like a table, but when it is converted to CSV it has only 3 lines.

b) The first 2 lines are headers and the third line holds the actual data, separated by commas.

c) To parse this file, this paper uses an open source library called “opencsv”.
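The layout described above, header lines followed by comma-separated data, can be parsed along these lines. The sketch uses Python's standard csv module as a stand-in for opencsv (which is a Java library); the column names and the sample record are hypothetical and are not taken from the actual data set.

```python
import csv
import io

# Hypothetical sample: two header lines, then one comma-separated data line.
SAMPLE = (
    "Earthquake catalogue\n"
    "date,time,latitude,longitude,depth_km,magnitude,region\n"
    "2015-01-01,03:12:45,30.1,102.5,10,2.3,Sichuan\n"
)


def parse_catalogue(text, header_lines=2):
    """Skip the header lines and return each data line as a list of fields."""
    reader = csv.reader(io.StringIO(text))
    rows = list(reader)
    return rows[header_lines:]


rows = parse_catalogue(SAMPLE)
for row in rows:
    print(row)
```

Using a CSV parser instead of splitting on commas by hand also handles quoted fields that themselves contain commas.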

The result of the test analysis is shown below:

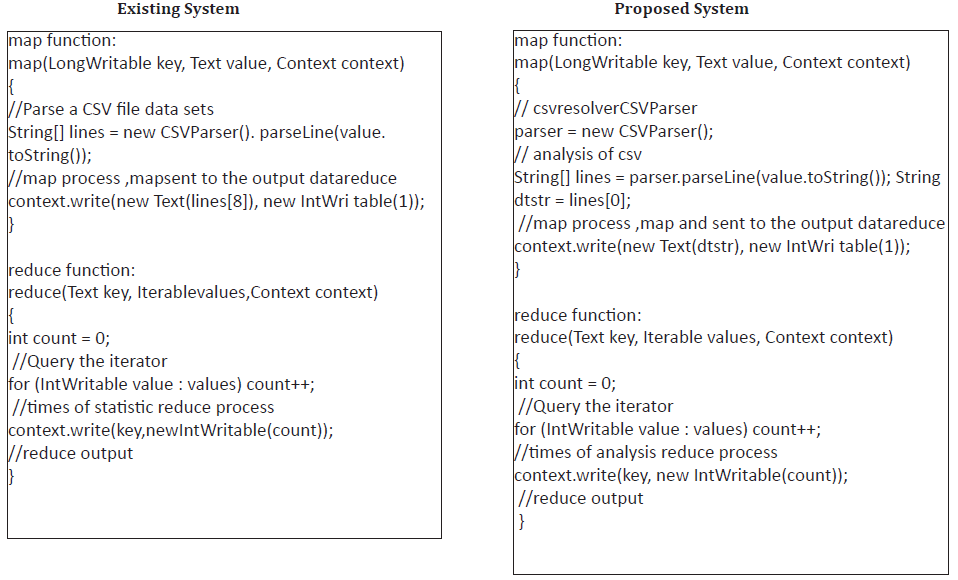

The Processing of Map and Reduce Functions [7]:

When processing data with MapReduce, the data set file is first loaded into the HDFS file system; the program then automatically divides the file into several blocks (default size 64MB in Hadoop 1.x, 128MB in Hadoop 2.x) and reads them line by line [8-10]. The map function parses each line, matches the preset keyword, and emits intermediate key-value pairs. The program automatically combines the key-value pairs that share the same key, packaging the corresponding values in an iterator, and passes each combination as input to the reduce step. The reduce function accumulates the intermediate key/value pairs and outputs the result in key/value form; finally, the total number of occurrences of each keyword in the data set is obtained [11].
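The map and reduce steps described above can be sketched for the earthquake counts by day and by region. This is a plain-Python simulation under stated assumptions, not the Java program used in the paper: the three-column record layout, the "day:" and "region:" key prefixes and the function names are hypothetical, and the helper `num_blocks` simply applies the 64MB block size quoted above.

```python
import math
from collections import defaultdict


def num_blocks(file_size_bytes, block_size_bytes=64 * 1024 * 1024):
    """Number of blocks a file is divided into at the given block size."""
    return math.ceil(file_size_bytes / block_size_bytes)


def map_line(line):
    """Emit (keyword, 1) pairs for one CSV line: one per day, one per region."""
    fields = line.strip().split(",")
    date, region = fields[0], fields[-1]   # hypothetical column positions
    yield ("day:" + date, 1)
    yield ("region:" + region, 1)


def reduce_counts(key, values):
    """Accumulate the values that were grouped under one key."""
    return key, sum(values)


def run_job(lines):
    """Map every line, group values by key, then reduce each group."""
    grouped = defaultdict(list)            # stands in for the framework's grouping
    for line in lines:
        for key, value in map_line(line):
            grouped[key].append(value)
    return dict(reduce_counts(k, vs) for k, vs in grouped.items())


# Hypothetical records: date, magnitude, region.
lines = [
    "2015-01-01,2.3,Sichuan",
    "2015-01-01,1.8,Yunnan",
    "2015-01-02,3.1,Sichuan",
]
counts = run_job(lines)
```

For these three sample records, `counts` holds one total per day and one per region, which corresponds to the two kinds of statistics (daily and regional) reported in the results.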

Experimental Analysis and Results

Environment of Experiment:

a) Hardware configuration – CPU: Intel® Core™ i7-4510U @ 2.00GHz, with 8.00GB of memory.

b) Virtual machine environment configuration [12]: OS – Ubuntu 12.04.

c) Hadoop version – Hadoop 2.7.1

d) IDE – Eclipse 4.3.0

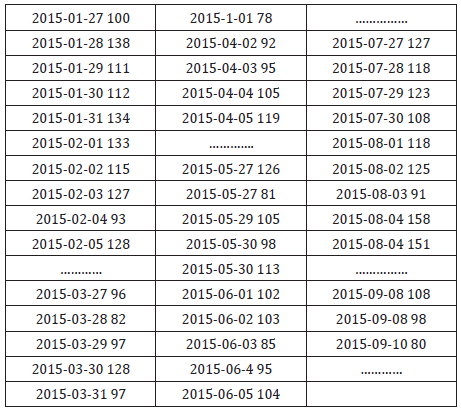

Result: Statistics by region and by day

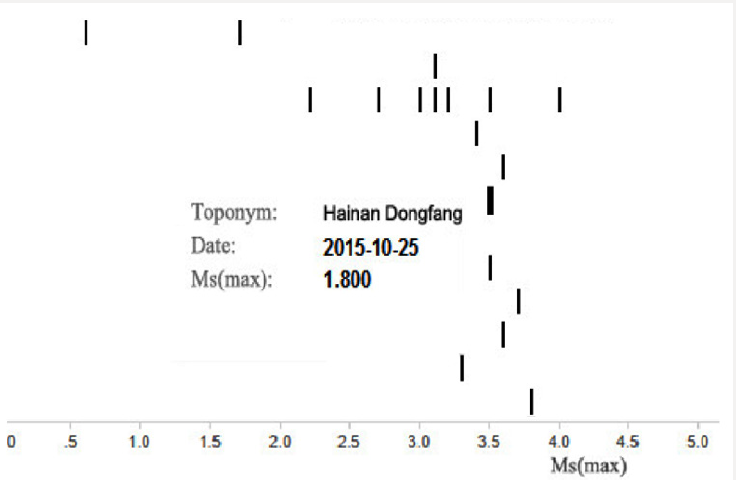

Graphical Representation:

a) Daily statistics graph, drawn from the data of Table 2.

b) Regional statistics graph, drawn from the data of Table 3.

c) Geographical representation of statistics.

Experimental Analysis and Results

Hadoop is a widely known framework for analyzing large data sets, and it performs well because it can analyze data sets in a parallel and distributed environment [13]. The Hadoop Distributed File System (HDFS) and MapReduce are the core modules of Hadoop: HDFS is responsible for data storage, while MapReduce is responsible for data processing. Huge data sets, such as web logs, can be processed for analysis by Hadoop [14]. This paper uses a Hadoop pseudo-distributed platform to analyze and process the seismic data released by the National Earthquake Monitoring Station. The experiments and tests are carried out on Hadoop. In other words, the Hadoop daemons run as separate Java processes, and the local host acts as both NameNode and DataNode [15]. With the help of Hadoop MapReduce, it is possible to process huge volumes of real-time digital data and analyze them easily. The results give the number of earthquakes in every region since 2015, which shows where the earthquake-prone areas were in that period, and also the times of the earthquakes since 2015, which shows when earthquakes occur during the year [16]. They also show the time and the magnitude of the earthquakes, as in Figure 4.

The results can also be shown directly by region of the country since 2015 [17]; the deeper the colour, the more earthquakes occurred there. In addition, they can show the largest magnitude and the deepest earthquake, as in Figure 5. The output is presented in a simple column-wise layout that is easy for a layperson to read. Hadoop output files can easily be exported to tools such as R and Tableau to generate suitable graphs and reports [18]. The analysis carried out on the Hadoop platform is very promising: it is efficient, has practical value and is easy to extend. It not only combines the theory and practical application of Hadoop, but also reflects the high reliability and efficiency of the Hadoop platform in handling data [19]. In summary, using the Hadoop platform to analyze and process huge data sets is efficient, has practical value and is easy to extend [20].

